Artificial Intelligence - By Purusha Shirvani

We often think of ourselves as living in a “modern world,” imagining the twenty-first century as the height of human civilization to date. Technology has advanced in almost every aspect of our lives: healthcare, medicine, disease prevention and detection, recreation, forensics, transportation, and more.

All of this was accomplished through what we proudly call our “superior intellect” compared with the other inhabitants of this planet. Over the last century, however, some have begun to worry that one day we might NOT be the most intelligent species on Earth. If that happened, we could no longer rule the planet the way we have for millennia. This perceived threat is usually tied to the fear that our own creations might be what accelerates our demise, which would make our improvements and advances a double-edged blade.

Double-Edged Blade

———————-

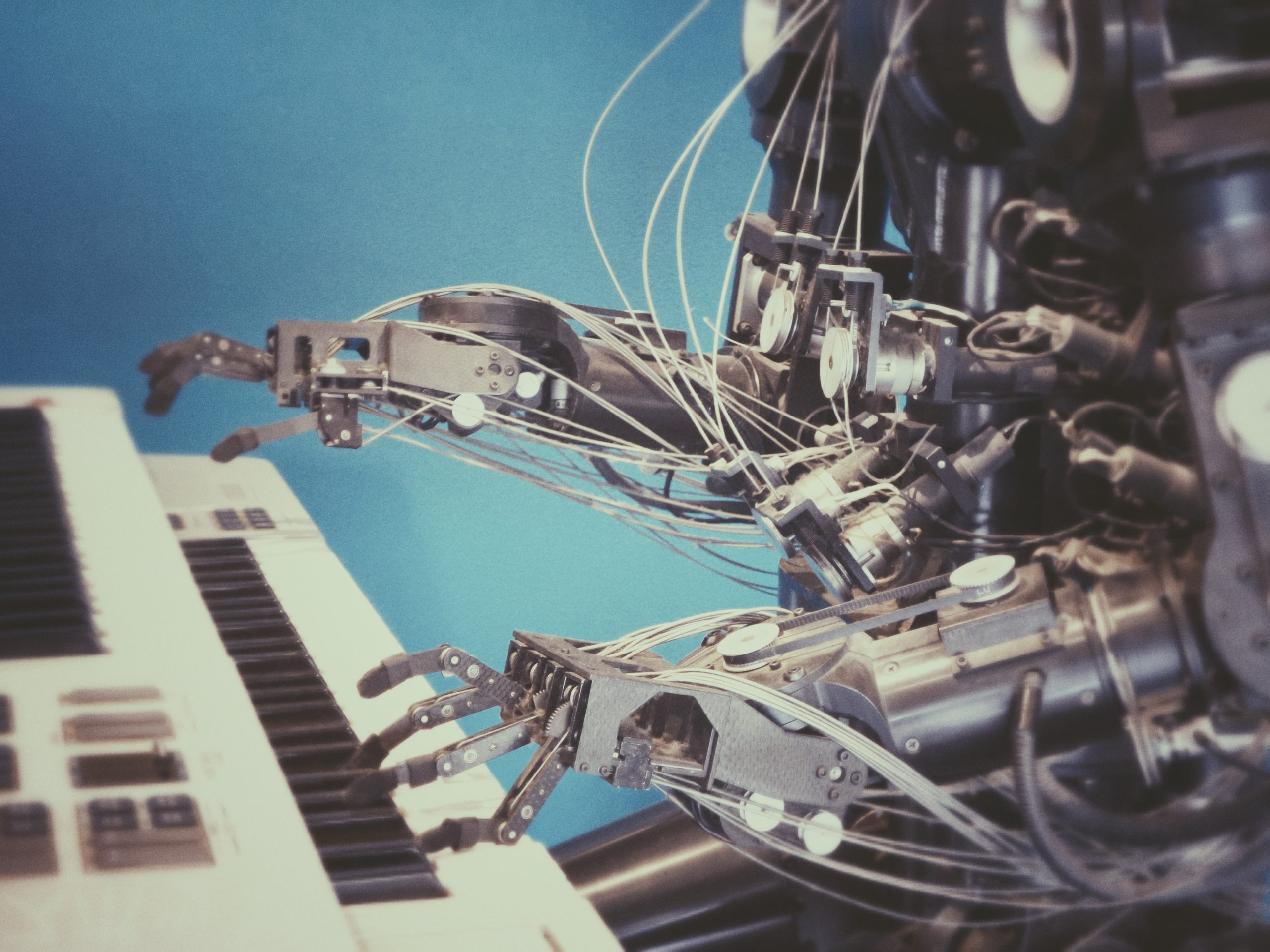

This double-edged blade can take many forms, but one of the most famous examples is artificial intelligence. The term is often misused as a label for any function that would otherwise require human intelligence, and it is frequently confused with machine learning. Machine learning is a subset of artificial intelligence in which systems learn and improve from experience without being explicitly programmed for each task. Ever since the introduction of ENIAC (Electronic Numerical Integrator and Computer), one of the first general-purpose electronic computers, fears that machines might one day overtake us have grown. These fears have often been expressed in stories, novels, and sometimes conspiracy theories.

However, contrary to what some of us think, the fear is neither wholly baseless nor “far off on the horizon.” Stephen Hawking once said that artificial intelligence could be the “worst event in the history of our civilization.” He also urged creators to “employ best practice and effective management.” Alan Turing proved that no general procedure can determine, for every possible program, whether it will eventually reach a conclusion and halt or loop forever trying to find a solution. This result is known as the halting problem.*

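This means that even simple rules such as “minimize human suffering” or “never harm humans” could have unknown consequences or steer an AI in ways we could never have predicted. The same limitation makes containment algorithms unusable in general. These are algorithms that try to enforce precisely such rules by checking, before an AI acts, whether its behavior would cause harm. Doing that reliably for every possible program would amount to solving the halting problem. A superintelligent AI whose capacity is unknown, or greater than our own, would therefore be unpredictable and, by extension, uncontrollable.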
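As an illustration not taken from the original argument, the “diagonal” trick behind Turing’s halting result can be sketched in Python. The sketch makes several assumptions. A “program” is just a zero-argument Python function, and a halting decider is any function that predicts True (halts) or False (runs forever). The names `make_contrarian` and `halted_within`, along with the two toy deciders, are hypothetical and introduced only for this example. Whatever a decider predicts about the program built from it, that program does the opposite, so no decider can be right about every program.

```python
import threading
import time
from typing import Callable

# Assumption for this sketch: a "program" is a zero-argument Python function.
Program = Callable[[], None]
# A hypothetical halting decider: predicts True (halts) or False (runs forever).
Decider = Callable[[Program], bool]


def make_contrarian(halts: Decider) -> Program:
    """Build a program that does the opposite of whatever `halts` predicts about it."""
    def contrarian() -> None:
        if halts(contrarian):
            # The decider says "halts", so loop forever instead.
            while True:
                time.sleep(0.01)
        # The decider says "runs forever", so return immediately instead.
    return contrarian


def halted_within(program: Program, seconds: float) -> bool:
    """Run `program` in a daemon thread and report whether it finished in time.

    A timeout is only evidence, not proof: a program still running after
    `seconds` might finish later. That gap is exactly what Turing showed
    cannot be closed in general.
    """
    worker = threading.Thread(target=program, daemon=True)
    worker.start()
    worker.join(timeout=seconds)
    return not worker.is_alive()


if __name__ == "__main__":
    # Two deliberately naive, hypothetical deciders; any cleverer one fails the same way.
    deciders = [("says_halts", lambda p: True), ("says_loops", lambda p: False)]
    for name, decider in deciders:
        program = make_contrarian(decider)
        print(f"{name}: predicts halt={decider(program)}, "
              f"halted within 0.2s={halted_within(program, 0.2)}")
```

Running it shows `says_halts` predicting a halt for a program that is still looping, and `says_loops` predicting an endless loop for a program that returns at once. The timeout in `halted_within` is only a practical stand-in. A program still running after 0.2 seconds might finish later, and that is the very question no general procedure can settle.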

Kind of scary, but it’s still a long way off, right?